Real-time optical Motion Capture (MoCap) systems have not benefited from the advances in modern data-driven modeling.

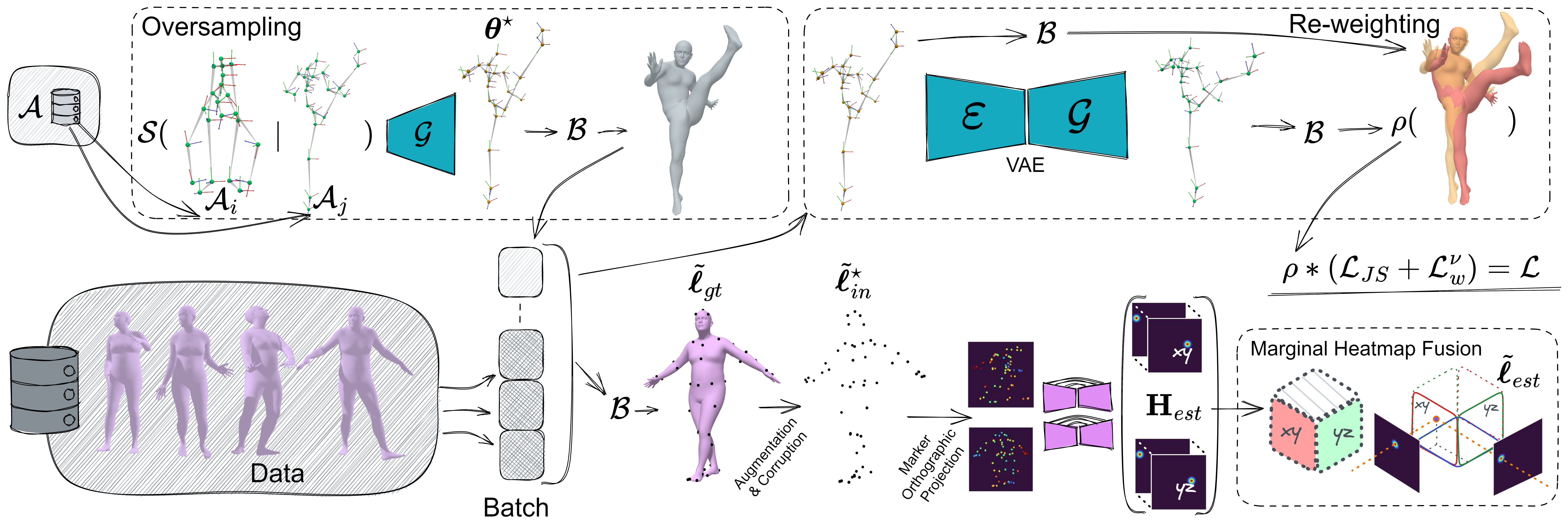

In this work, we apply machine learning to solve noisy, unstructured marker estimates in real time and deliver robust marker-based MoCap, even when using sparse, affordable sensors. To achieve this, we focus on a number of challenges related to model training, namely the sourcing of training data and their long-tailed distribution. Leveraging representation learning, we design a technique for imbalanced regression that requires no additional data or labels and improves the performance of our model on rare and challenging poses. By relying on a unified representation, we show that training such a model is not bound to high-end MoCap training data acquisition and can instead exploit the advances in marker-less MoCap to acquire the necessary data.

Finally, we take a step towards richer and affordable MoCap by adapting a body model-based inverse kinematics solution to account for measurement and inference uncertainty, further improving performance and robustness.

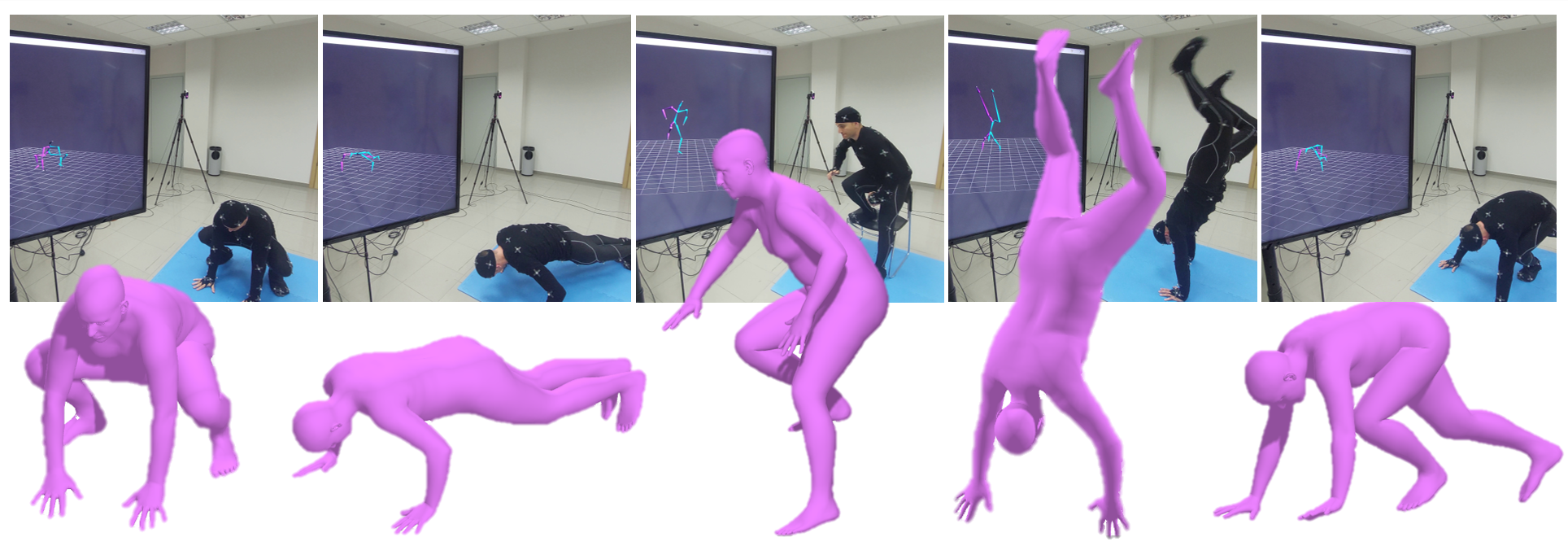

The generative and disentangling nature of modern synthesis models shapes manifolds that map inputs to the underlying factors of data variation, effectively mapping similar poses to nearby latent codes that can be traversed across the latent space dimensions. Given two anchor poses, we use spherical linear interpolation (SLERP) to select a code in between them and generate plausible poses.
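The interpolation step can be illustrated with a minimal sketch. It assumes latent codes are plain lists of floats. The function name `slerp`, the `eps` threshold, and the fallback to linear interpolation for near-parallel codes are illustrative choices, not details taken from our implementation.

```python
import math

def slerp(z0, z1, t, eps=1e-8):
    """Spherical linear interpolation between two latent codes (lists of floats)."""
    n0 = math.sqrt(sum(a * a for a in z0))
    n1 = math.sqrt(sum(b * b for b in z1))
    if n0 < eps or n1 < eps:
        # A zero-length code has no direction: fall back to linear interpolation.
        return [(1.0 - t) * a + t * b for a, b in zip(z0, z1)]
    # Angle between the two codes, clamped to guard against rounding error.
    cos_omega = sum(a * b for a, b in zip(z0, z1)) / (n0 * n1)
    omega = math.acos(max(-1.0, min(1.0, cos_omega)))
    sin_omega = math.sin(omega)
    if sin_omega < eps:
        # Nearly (anti-)parallel codes: the spherical weights are ill-defined.
        return [(1.0 - t) * a + t * b for a, b in zip(z0, z1)]
    w0 = math.sin((1.0 - t) * omega) / sin_omega
    w1 = math.sin(t * omega) / sin_omega
    return [w0 * a + w1 * b for a, b in zip(z0, z1)]

# Five codes from the start anchor (t = 0) to the end anchor (t = 1).
z_start, z_end = [1.0, 0.0], [0.0, 1.0]
path = [slerp(z_start, z_end, k / 4) for k in range(5)]
```

Each code in `path` would then be passed through the decoder of the synthesis model (not shown here) to obtain an in-between pose.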

Panels: Start Frame, End Frame.

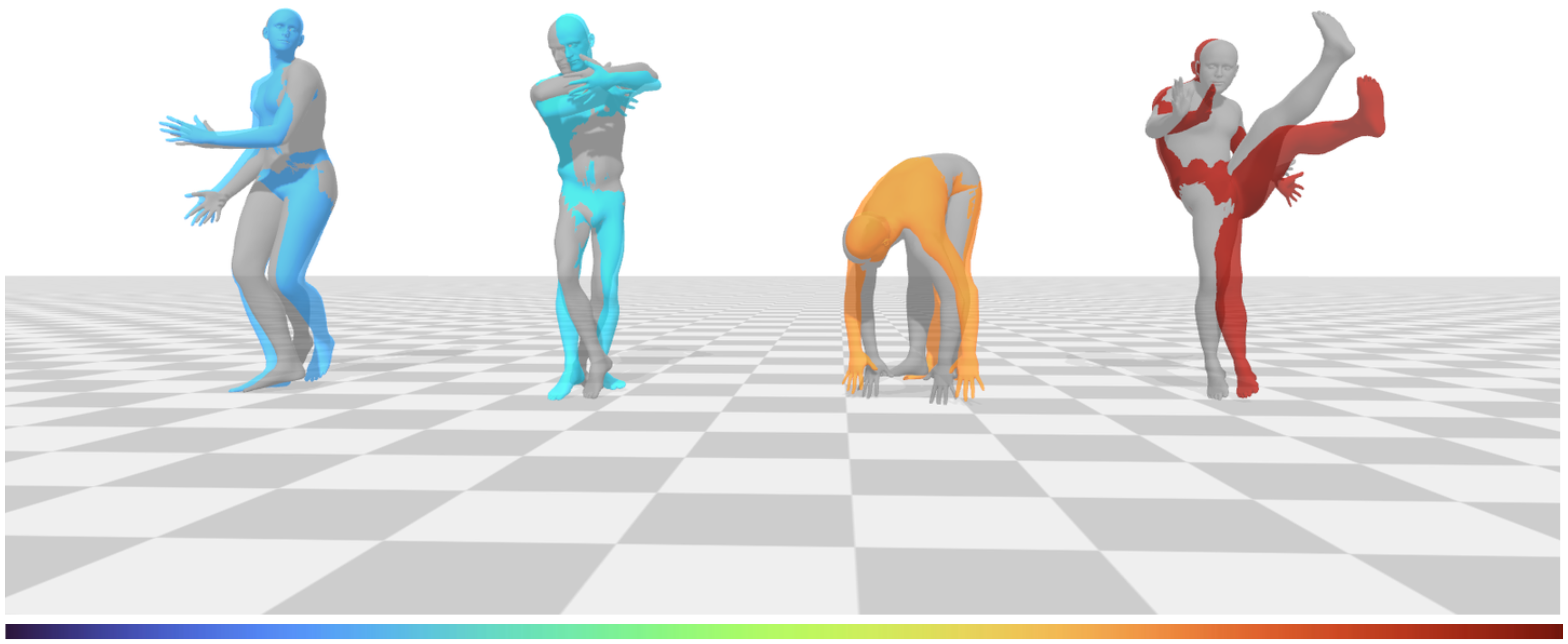

Tail poses are hard to reconstruct accurately. We exploit this bias in pose reconstructability and transform it into a confidence value through a relevance function. Of the investigated functions, we opt for the exponential one, normalized by a scaling factor σ, which assigns higher penalties to the worst reconstructed poses. We use turbo colorization to color-code the assigned penalty (weight) in the depicted samples, which belong to the AMASS dataset [1].
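As a rough illustration of how a reconstruction error could be turned into a weight, the sketch below uses one possible exponential form, scaled by σ and shifted so that the worst reconstructed sample receives weight 1. The exact functional form, the function names, and the example values are assumptions made for illustration, not the formulation used in the paper.

```python
import math

def exponential_relevance(errors, sigma):
    """Map per-sample reconstruction errors to weights in (0, 1].

    Assumed form: w_i = exp((e_i - max(e)) / sigma), so the weight grows
    with the error and the worst reconstructed sample gets weight 1.
    """
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    worst = max(errors)
    return [math.exp((e - worst) / sigma) for e in errors]

def weighted_mean_loss(losses, weights):
    """Relevance-weighted average of per-sample losses."""
    return sum(w * l for w, l in zip(weights, losses)) / sum(weights)

# Hypothetical reconstruction errors for three poses (common -> rare).
errors = [0.0, 1.0, 2.0]
weights = exponential_relevance(errors, sigma=1.0)
```

A smaller σ concentrates the weight on the hardest samples, while a larger σ flattens the weights toward uniform.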

We train our real-time MoCap model by balancing the biased data distribution with a combination of oversampling through synthesis and relevance re-weighting based on sample rarity. Our convolutional model estimates 3D landmarks by learning to solve a 2D task, exploiting the maturity of structured heatmap representations. The estimated markers and joints are used as input to our robust solver.
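To make the heatmap representation concrete, here is a minimal sketch of one common way to decode a 2D heatmap into a sub-pixel landmark location, a spatial soft-argmax. Whether our model decodes heatmaps this way, and how the 2D estimates are lifted to 3D, is not covered by this sketch; `soft_argmax_2d` and `beta` are illustrative names.

```python
import math

def soft_argmax_2d(heatmap, beta=1.0):
    """Return the expected (x, y) pixel coordinate of a 2D heatmap.

    `heatmap` is a list of rows; `beta` sharpens the softmax so that the
    result approaches the hard argmax as beta grows.
    """
    peak = max(max(row) for row in heatmap)
    # Softmax over all pixels; subtracting the peak keeps exp() stable.
    weights = [[math.exp(beta * (v - peak)) for v in row] for row in heatmap]
    total = sum(sum(row) for row in weights)
    x = sum(w * j for row in weights for j, w in enumerate(row)) / total
    y = sum(w * i for i, row in enumerate(weights) for w in row) / total
    return x, y

# A 3x3 heatmap peaking at row 1, column 2.
heatmap = [
    [0.0, 0.0, 0.0],
    [0.0, 0.0, 1.0],
    [0.0, 0.0, 0.0],
]
x, y = soft_argmax_2d(heatmap, beta=50.0)
```

Unlike a hard argmax, this decoding is differentiable, which is one reason heatmap-based landmark models are convenient to train end to end.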

Instead of labeling the 3D landmark positions as in SOMA [2], our solver fits a parametric model (we use SMPL [3]) to the values estimated through regression to obtain the articulated skeleton and the mesh surface of the input sample. Our solver assumes noisy input from off-the-shelf sensors and is trained with an adaptive noise-aware fitting objective. Compared to existing solutions like MoSh [4], our method is more robust to in-the-wild captures by affordable sensors.
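The idea of noise-aware fitting can be illustrated with a toy example. The sketch below is not our solver: instead of SMPL parameters it fits only a global translation, and it assumes a fixed, known standard deviation per marker rather than an adaptive one. All names (`noise_aware_objective`, `fit_translation`, `sigmas`) are hypothetical. It shows how inverse-variance weighting keeps an unreliable marker from dominating the fit.

```python
def noise_aware_objective(observed, predicted, sigmas):
    """Sum of squared 3D residuals, each scaled by its inverse variance."""
    total = 0.0
    for obs, pred, s in zip(observed, predicted, sigmas):
        sq = sum((o - p) ** 2 for o, p in zip(obs, pred))
        total += sq / (s * s)
    return total

def fit_translation(observed, template, sigmas):
    """Closed-form minimizer of the objective over a global translation."""
    weights = [1.0 / (s * s) for s in sigmas]
    wsum = sum(weights)
    return tuple(
        sum(w * (obs[k] - tpl[k])
            for w, obs, tpl in zip(weights, observed, template)) / wsum
        for k in range(3)
    )

# Template markers shifted by (0.5, -0.2, 1.0); the last observation is
# corrupted along x and flagged as unreliable through a large sigma.
template = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
observed = [(0.5, -0.2, 1.0), (1.5, -0.2, 1.0), (3.5, 0.8, 1.0)]
sigmas = [0.01, 0.01, 100.0]
t = fit_translation(observed, template, sigmas)
```

With uniform weights the corrupted marker would pull the estimated x-translation to 1.5, whereas the weighted fit stays close to the true value of 0.5.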

| Regression vs. Labeling | Noise-aware vs. Plain Fitting |
|---|---|

Left: Fits to our regressed (purple) vs. SOMA-labeled (orange) markers, compared with ground-truth meshes (gray). Right: Euclidean distance from the ground-truth mesh for our noise-aware approach vs. MoSh (colorized with the 'jet' colormap).

@inproceedings{albanis2023noise,

author = {Albanis, Georgios and Zioulis, Nikolaos and Thermos, Spyridon and Chatzitofis, Anargyros and Kolomvatsos, Kostas},

title = {Noise-in, Bias-out: Balanced and Real-time MoCap Solving},

booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) Workshops},

url = {https://moverseai.github.io/noise-tail/},

month = {October},

year = {2023}

}