Solving Neural Articulated Performances

Dynamic Human Performances

a digitization challenge

Humans move in very different ways depending on the context and their surrounding environment.

Human motion exploits and engages with the space around us to convey thoughts and emotions, perform actions, play instruments or express intentions.

Body motion is the common denominator of all these activities.

Our movements mix coarse limb motion, fine-grained finger manipulation, and facial expressions.

[Image: a group performance, by Ahmad Odeh]

Even though performances vary, our flexibility and adaptability allow us to transition fluently from one type of motion (e.g., sports) to another (e.g., dance).

We blend and mix motion types, showing emotions while dancing or playing sports.

This high-dimensional transition space makes capturing and digitizing human performances very challenging.

Objects, clothing and hair used in these performances increase capture complexity significantly.

Additionally, considering multiple simultaneous and/or interacting performers, as well as (social) group dynamics, makes the complexity of the challenge scale exponentially.

But nowadays we are witnessing another exponential curve, a technological one.

Neural Graphics & Vision

the new continuum

Reconstruction and simulation are the two ends of a digital thread we use to make sense of our physical world.

On one end, computer vision uses sensors to reconstruct our surroundings; on the other, computer graphics relies on models to simulate them.

For years, the reconstruction end lagged behind simulation, which has been producing impressive visual effects, virtual worlds and cinematics.

But recent advances in machine learning have improved machine perception.

It is now possible to understand our environments and reconstruct their various layers.

These layers range from lower-level ones, like 3D geometry and appearance, to higher-level ones, like semantics and even affordances.

The same advances are also affecting the other end, enabling more efficient simulation technology.

This works in tandem with the reconstruction progress, creating a virtuous cycle.

It used to be that engineers and scientists worked at the intersection of graphics and vision.

Neural techniques are now tying the knot, connecting these two ends.

As a result, novel techniques are emerging, allowing us to traverse the graphics-to-vision continuum seamlessly, and opening up new pathways to explore.

Richer Assets

the real in hyper-realistic

High-quality character performances are produced for film, visual effects and game cinematics.

Technologies like animation, deformation and physically-based rendering are the driving forces of such compelling content.

Still, creating them requires immense human effort, as well as artistry, and has been enabled by real-time editing tools that themselves aggregate many technical advances.

[Image: animated 3D asset, by Jungle Jim]

For a long time, this remained solely on the simulation side of the continuum. We started reconstructing production-grade human performances almost a decade ago. Microsoft’s Mixed Reality Capture Studio technology [1] (now at Arcturus), 4DViews, Volucap and Volograms delivered impressive 4D capture systems.

Still, recent advances show new potential to extend capture to deformations, thin structures, and even effects that were not possible before.

Earlier works from 2021 [2] and 2022 [3] used implicit neural fields to capture humans with pose- or time-dependent deformations.

More recent works have started to reconstruct and simulate complex cloth [4] and hair [5] dynamics:

[Figure panels: Cloth, Hair]

Another new capability emerging from neural rendering and radiance field techniques is the capture of effects from participating media. These techniques also enable the capture of lighting variations and reflections.

Further, these techniques can drive the capture of subsurface scattering [6], smoke and clouds [7], or scenes seen through fog [8] and water [9]!

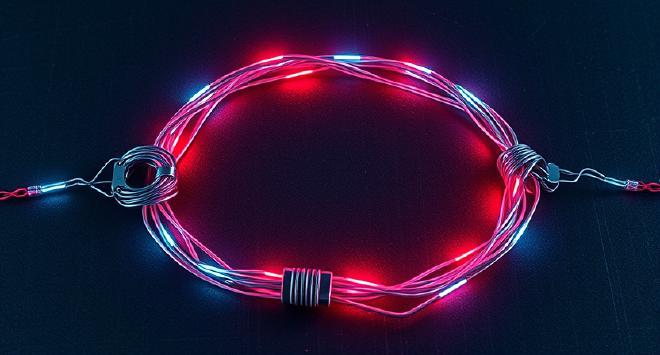

Volumetric effects such as fire and lights can now be captured as well, as shown by the creative Gaussian Splatting works of Stéphane Couchoud and Eric Paré, respectively:

[Figure panels: Fire, Lights]

Evidently, we are reaching a critical point where the combined progress driven by AI and differentiable rendering will consolidate into human capture technology that delivers much richer assets.

Such assets disentangle the appearance, geometry, deformation and animation layers, maximizing editability and control. More importantly, this should be possible while simultaneously scaling down the capture requirements from hundreds of cameras and controlled environments to portable and/or mobile systems deployed in the wild.

Live Representations

holograms, my young Padawan

Tele-presence promises to elevate real-time collaboration and communication beyond contemporary audio-visual systems.

Given its digital nature, it has the potential to surpass even the “gold standard” of face-to-face communication.

So far, systems like Microsoft’s Holoportation and Google’s Starline have spearheaded tele-presence technology.

The requirement of interaction-level latency makes these systems very complex.

Latency is a major pain point because the requirement applies end to end, extending beyond capture to transmission and rendering.

The chosen 3D representation shapes the design space of any real-time tele-presence system.

This includes the capture setup and upstream bandwidth; the capacity and performance of the server and client components for encoding and decoding; rendering; and any spatial tiling or level-of-detail support.

All of these influence latency and upstream/downstream bandwidth, as well as the fidelity of the reconstruction and the resulting quality of experience (QoE).

While research efforts have started focusing on new forms of compression [10] and streamability [11], animatable radiance-field-based representations are a promising way to better manage the underlying trade-offs.

With such drivable representations, lightweight animation data can be streamed to control a deformable representation.

This avoids continuously transmitting dense visual representations: only a one-off cost is paid for the drivable representation.
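To give a feel for this trade-off, here is a back-of-the-envelope comparison. Every size in it is a hypothetical placeholder, not a measurement from any real system. It assumes a dense per-frame representation of 2 MB, a one-off drivable avatar of 50 MB, and a pose stream of 72 float32 values per frame, roughly the size of a SMPL-style axis-angle pose vector. Under these assumptions the one-off cost is recovered within the first second of a 30 fps stream.

```python
import math

# All sizes below are HYPOTHETICAL placeholders chosen for illustration only.
FPS = 30
DURATION_S = 60
DENSE_BYTES_PER_FRAME = 2_000_000       # e.g. a compressed per-frame mesh + texture
AVATAR_BYTES_ONE_OFF = 50_000_000       # drivable avatar, transmitted once
POSE_PARAMS = 72                        # e.g. 24 joints x 3 axis-angle values
ANIM_BYTES_PER_FRAME = POSE_PARAMS * 4  # one float32 per pose parameter


def total_bytes(num_frames: int, per_frame: int, one_off: int = 0) -> int:
    """Total bytes sent in a session: an optional one-off payload plus a per-frame stream."""
    return one_off + num_frames * per_frame


def break_even_frames(one_off: int, dense_per_frame: int, anim_per_frame: int) -> int:
    """Smallest frame count at which the drivable stream costs no more than the dense one."""
    return math.ceil(one_off / (dense_per_frame - anim_per_frame))


frames = FPS * DURATION_S
dense = total_bytes(frames, DENSE_BYTES_PER_FRAME)
drivable = total_bytes(frames, ANIM_BYTES_PER_FRAME, AVATAR_BYTES_ONE_OFF)

print(f"dense stream:    {dense / 1e6:.1f} MB")
print(f"drivable stream: {drivable / 1e6:.1f} MB")
print("break-even after",
      break_even_frames(AVATAR_BYTES_ONE_OFF, DENSE_BYTES_PER_FRAME, ANIM_BYTES_PER_FRAME),
      "frames")
```

Changing the placeholder sizes moves the break-even point. As long as the per-frame animation payload is much smaller than the dense one, the one-off avatar cost is amortized quickly.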

Compared to conventional skinned animation, such drivable representations can also support pose-dependent deformations, conveying the necessary realism.

The video above shows Meta’s Codec Avatars, a technology focused on exploring the advantages of real-time avatar drivability [12, 13].
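The difference between plain skinning and pose-dependent deformation can be sketched with linear blend skinning (LBS) in 2D. This is a simplified illustration, not the method of any cited work. Each bone is a rotation about a pivot given directly in world space, with no joint hierarchy. The optional `correctives` argument is a hypothetical linear basis, loosely in the spirit of SMPL-style pose blend shapes, that offsets rest-pose vertices as a function of the bone angles before skinning.

```python
import math

Vec = tuple[float, float]


def rotate(v: Vec, angle: float, pivot: Vec) -> Vec:
    """Rotate point v by `angle` radians around `pivot` (a rigid 2D bone transform)."""
    c, s = math.cos(angle), math.sin(angle)
    x, y = v[0] - pivot[0], v[1] - pivot[1]
    return (pivot[0] + c * x - s * y, pivot[1] + s * x + c * y)


def skin(vertices, weights, bone_angles, bone_pivots, correctives=None):
    """Linear blend skinning with optional pose-dependent offsets.

    vertices:    rest-pose points, one (x, y) per vertex
    weights:     weights[i][j] = influence of bone j on vertex i (rows sum to 1)
    correctives: HYPOTHETICAL basis, correctives[k][i] = (dx, dy) offset of vertex i
                 per radian of bone k; applied in the rest pose, before skinning
    """
    out = []
    for i, v in enumerate(vertices):
        if correctives is not None:
            # Pose-dependent offset: linear in the bone angles.
            dx = sum(a * correctives[k][i][0] for k, a in enumerate(bone_angles))
            dy = sum(a * correctives[k][i][1] for k, a in enumerate(bone_angles))
            v = (v[0] + dx, v[1] + dy)
        # Blend the positions produced by each bone's rigid transform.
        x = y = 0.0
        for j, (angle, pivot) in enumerate(zip(bone_angles, bone_pivots)):
            px, py = rotate(v, angle, pivot)
            x += weights[i][j] * px
            y += weights[i][j] * py
        out.append((x, y))
    return out


# Two-bone "arm": upper arm pivots at the origin, forearm at the elbow (1, 0).
verts = [(1.0, 0.0), (1.5, 0.0), (2.0, 0.0)]
w = [[0.5, 0.5], [0.5, 0.5], [0.0, 1.0]]
angles = [0.0, math.pi / 2]           # elbow bent by 90 degrees
pivots = [(0.0, 0.0), (1.0, 0.0)]
print(skin(verts, w, angles, pivots))
```

With the elbow bent by 90 degrees, the middle vertex sits 0.5 units from the elbow at rest but ends up at about (1.25, 0.25), only about 0.35 units away. This collapse is a well-known LBS artifact. Pose-dependent offsets counter it: here a non-zero `correctives` basis, in neural avatars a learned deformation.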

So … SNAP!

a new way?

Animatable digital human representations consolidate human reconstruction and simulation.

They can jointly serve as the technical backbone for holistic performance capture, rich 3D human assets and efficient tele-presence.

SNAP is a project that seeks to explore the synergies between human motion and appearance capture as enabled by the neural graphics and vision continuum.

The tools are numerous, ranging from explicit parametric body models to implicit fields, differentiable rendering and efficiently optimized Gaussian splats.

From an end-goal perspective, animatable humans based on neural fields and/or Gaussian splats require high-quality motion capture.

But once a simulation-ready radiance field avatar of an actor has been reconstructed, one that deforms clothing, respects lighting variations and even moves hair as the body moves, it can also be used to solve for complex human performances using solely the raw image data.

Since markerless motion capture and human radiance field technologies are developed for the same acquisition system, namely color camera(s), the interplay between these two technologies is significant and worth exploring.
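To make one of these tools more concrete, the sketch below shades a single pixel from screen-space Gaussians. It uses front-to-back alpha compositing, C = Σ_i c_i α_i Π_{j<i}(1 − α_j) with α_i = o_i · exp(−½ dᵀ Σ_i⁻¹ d), which is the blending rule used in 3D Gaussian splatting. It assumes the Gaussians are already projected to 2D. The `Splat2D` fields and the clamping and early-termination thresholds are illustrative choices. Projection, tiling and the backward pass are omitted.

```python
import math
from dataclasses import dataclass


@dataclass
class Splat2D:
    """A Gaussian already projected to screen space (hypothetical minimal fields)."""
    mean: tuple[float, float]
    cov: tuple[float, float, float]      # (sxx, sxy, syy) of the 2x2 covariance
    color: tuple[float, float, float]
    opacity: float
    depth: float


def gaussian_weight(splat: Splat2D, px: float, py: float) -> float:
    """Evaluate exp(-0.5 * d^T Sigma^-1 d) at pixel (px, py)."""
    sxx, sxy, syy = splat.cov
    det = sxx * syy - sxy * sxy
    ixx, ixy, iyy = syy / det, -sxy / det, sxx / det   # inverse of the 2x2 covariance
    dx, dy = px - splat.mean[0], py - splat.mean[1]
    return math.exp(-0.5 * (dx * dx * ixx + 2.0 * dx * dy * ixy + dy * dy * iyy))


def shade_pixel(splats, px, py, background=(0.0, 0.0, 0.0)):
    """Front-to-back alpha compositing of depth-sorted splats for one pixel."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for sp in sorted(splats, key=lambda s: s.depth):    # nearest first
        alpha = min(0.99, sp.opacity * gaussian_weight(sp, px, py))
        for c in range(3):
            color[c] += transmittance * alpha * sp.color[c]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:                          # early termination
            break
    return tuple(color[c] + transmittance * background[c] for c in range(3))


front = Splat2D((0.0, 0.0), (1.0, 0.0, 1.0), (1.0, 0.0, 0.0), 0.5, 1.0)  # red, nearer
back = Splat2D((0.0, 0.0), (1.0, 0.0, 1.0), (0.0, 0.0, 1.0), 0.5, 2.0)   # blue, farther
print(shade_pixel([back, front], 0.0, 0.0))  # prints (0.5, 0.0, 0.25)
```

Apart from the sorting, clamping and early exit, every operation here is smooth in the splat parameters. That property is what allows such renderers to be differentiated and the splats to be optimized directly from images.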
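Solving for motion from raw images with a reconstructed, renderable model is a form of analysis-by-synthesis. The model is rendered under candidate pose parameters, the rendering is compared with the observed image, and the parameters are updated to reduce the difference. The toy sketch below reduces this to a single "pose" parameter that positions a 1D Gaussian blob. The image size, blob width, learning rate and step count are hypothetical. Central finite differences stand in for the automatic differentiation that a real differentiable renderer would provide.

```python
import math

PIXELS = list(range(10))   # hypothetical 1D "image" with 10 pixels
SIGMA = 1.0                # hypothetical blob width


def render(theta: float) -> list[float]:
    """Render one 1D Gaussian blob centred at `theta` (the toy 'pose' parameter)."""
    return [math.exp(-((x - theta) ** 2) / (2 * SIGMA ** 2)) for x in PIXELS]


def photometric_loss(theta: float, target: list[float]) -> float:
    """Sum of squared pixel differences between the rendering and the observed image."""
    return sum((r - t) ** 2 for r, t in zip(render(theta), target))


def fit_pose(target: list[float], theta0: float,
             lr: float = 0.2, steps: int = 200, eps: float = 1e-4) -> float:
    """Gradient descent on the photometric loss; central differences replace autodiff."""
    theta = theta0
    for _ in range(steps):
        grad = (photometric_loss(theta + eps, target)
                - photometric_loss(theta - eps, target)) / (2 * eps)
        theta -= lr * grad
    return theta


observed = render(3.0)                  # stands in for a captured camera image
estimate = fit_pose(observed, theta0=2.0)
print(f"recovered theta = {estimate:.4f}")
```

A real performance solver would optimize many joint parameters against multi-view color images, but the loop has the same shape: render, compare, update.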

References

High-Quality Streamable Free-Viewpoint Video, SIGGRAPH 2015

Reloo: Reconstructing humans dressed in loose garments from monocular video in the wild, ECCV 2024

NeRSemble: Multi-view Radiance Field Reconstruction of Human Heads, SIGGRAPH 2023

Subsurface Scattering for 3D Gaussian Splatting, NeurIPS 2024

Don’t Splat your Gaussians: Volumetric Ray-Traced Primitives for Modeling and Rendering Scattering and Emissive Media

ScatterNeRF: Seeing Through Fog with Physically-Based Inverse Neural Rendering, ICCV 2023

Gaussian Splashing: Direct Volumetric Rendering Underwater

Neural Residual Radiance Fields for Streamably Free-Viewpoint Videos, CVPR 2023

Drivable Volumetric Avatars using Texel-Aligned Features, SIGGRAPH 2022

Driving-Signal Aware Full-Body Avatars, ACM TOG 2021