DeblurAvatar

Deblur-Avatar: Animatable Avatars from Motion-Blurred Monocular Videos

Xianrui Luo, Juewen Peng, Zhongang Cai, Lei Yang, Fan Yang, Zhiguo Cao, Guosheng Lin

Splats

SMPL

arXiv 2025

Abstract

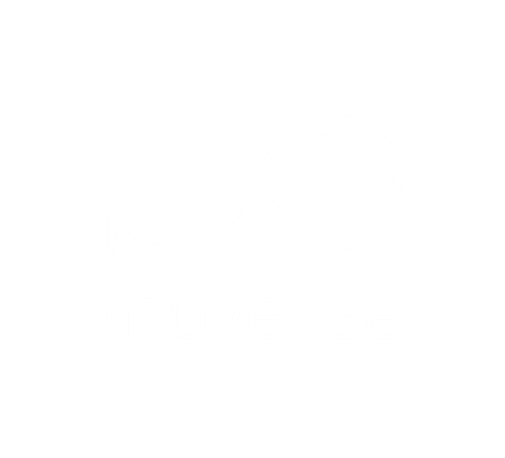

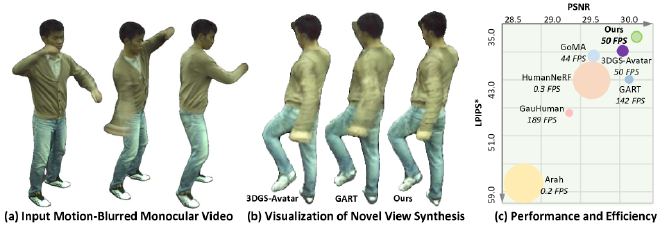

We introduce a novel framework for modeling high-fidelity, animatable 3D human avatars from motion-blurred monocular video. Motion blur is prevalent in real-world capture of dynamic scenes, and in 3D human avatar modeling it arises mainly from the subject's own movement. Existing methods assume sharp inputs, so they neglect this blur and fail to recover the detail it removes. Our approach integrates a motion blur model based on human movement into 3D Gaussian Splatting. By explicitly modeling human motion trajectories during the exposure time, we jointly optimize the trajectories and the 3D Gaussians to reconstruct sharp, high-quality avatars. A pose-dependent fusion mechanism distinguishes moving body regions so that both blurred and sharp areas are optimized effectively. Extensive experiments on synthetic and real-world datasets show that our method significantly outperforms existing methods in rendering quality and quantitative metrics, producing sharp avatar reconstructions and enabling real-time rendering under challenging motion blur.
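To make the blur model in the abstract concrete, the sketch below shows the standard exposure-time formulation it alludes to: a blurred frame is approximated as the average of sharp renders taken at poses sampled along the motion trajectory, B ≈ (1/N) Σ I(θ_i). This is an illustrative toy and not the authors' implementation. The names `interpolate_poses`, `synthesize_blur`, `fuse`, and `toy_render` are hypothetical; the linear pose interpolation, the single-pixel stand-in renderer, and the per-pixel mask blend used for fusion are all assumptions made for the example.

```python
import numpy as np


def interpolate_poses(pose_start, pose_end, num_samples):
    """Sample poses along the exposure window.

    Assumption: plain linear interpolation of a flat pose vector. Real SMPL
    joint rotations would normally be interpolated on the rotation manifold.
    """
    ts = np.linspace(0.0, 1.0, num_samples)
    return [(1.0 - t) * pose_start + t * pose_end for t in ts]


def synthesize_blur(render_fn, pose_start, pose_end, num_samples=8):
    """Approximate a blurred frame as the mean of sharp sub-frame renders."""
    poses = interpolate_poses(pose_start, pose_end, num_samples)
    frames = np.stack([render_fn(p) for p in poses], axis=0)
    return frames.mean(axis=0)


def fuse(blurred, sharp, mask):
    """Hypothetical fusion: per-pixel blend of the blurred and sharp renders.

    mask is 1 where a region is treated as moving and 0 where it is static.
    """
    return mask * blurred + (1.0 - mask) * sharp


def toy_render(pose, size=16):
    """Stand-in for a Gaussian-splat renderer (hypothetical).

    Draws one bright pixel on the middle row; pose[0] selects its column.
    """
    img = np.zeros((size, size))
    col = int(np.clip(round(float(pose[0])), 0, size - 1))
    img[size // 2, col] = 1.0
    return img


if __name__ == "__main__":
    start, end = np.array([2.0]), np.array([9.0])
    blurred = synthesize_blur(toy_render, start, end, num_samples=8)
    sharp = toy_render(start)
    # The single bright pixel is smeared into a streak along the motion path.
    print(np.count_nonzero(sharp), np.count_nonzero(blurred))
```

In a trainable version of this idea, the synthesized blurred frame would be compared against the observed blurry video frame, so that gradients reach both the sharp scene representation and the trajectory endpoints. That corresponds to the joint optimization the abstract describes, though the paper's actual parameterization may differ.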

Approach

DeblurAvatar overview.

DeblurAvatar details.

Results

Data

Comparisons

Performance
